Efficient Neural Network Compression for Reconfigurable Hardware

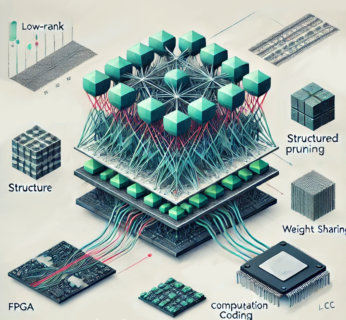

A significant portion of the computational burden in most neural networks arises from constant matrix-vector multiplications (CMVMs), in which a fixed weight matrix is multiplied by a varying input vector. Linear computation coding (LCC) has emerged as a promising approach for approximating CMVMs with large matrices, and it lends itself to a hardware-efficient parallel implementation on reconfigurable hardware such as FPGAs.

However, optimizing CMVMs is only one way to make neural networks more efficient. Various compression techniques have been proposed to reduce the number of parameters in a network while maintaining prediction accuracy. Several of these methods could be combined with LCC to improve efficiency further.

Some of the most promising techniques include:

- Low-rank decompositions

- Structured pruning

- Weight sharing
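
As a rough illustration of what each of the three techniques does to a single weight matrix, the following NumPy sketch may help. It is a toy under stated assumptions, not the method used in this work: the function names `low_rank_factors`, `prune_rows`, and `share_weights` are hypothetical, the matrix is random, the pruning criterion (row L2 norm) is only one common choice, and weight sharing is simplified to uniformly spaced levels instead of a learned codebook. It does not implement LCC.

```python
import numpy as np


def low_rank_factors(W, rank):
    """Low-rank decomposition via truncated SVD: W (m x n) ~= A (m x rank) @ B (rank x n)."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :rank] * s[:rank]  # scale each kept column by its singular value
    B = Vt[:rank, :]
    return A, B


def prune_rows(W, keep):
    """Structured pruning: keep the `keep` rows (output neurons) with the largest L2 norm."""
    norms = np.linalg.norm(W, axis=1)
    kept = np.sort(np.argsort(norms)[-keep:])  # indices of kept rows, in original order
    return W[kept, :], kept


def share_weights(W, n_values):
    """Weight sharing (simplified): snap each weight to the nearest of n_values uniform levels."""
    codebook = np.linspace(W.min(), W.max(), n_values)
    idx = np.abs(W[..., None] - codebook).argmin(axis=-1)
    return codebook, idx


rng = np.random.default_rng(0)
W = rng.standard_normal((64, 128))  # stand-in for a trained weight matrix
x = rng.standard_normal(128)        # stand-in for an input vector

# Low rank: two small products replace one large one.
A, B = low_rank_factors(W, rank=8)
y_low_rank = A @ (B @ x)

# Structured pruning: whole rows are removed, so the result is a smaller dense matrix.
W_pruned, kept_rows = prune_rows(W, keep=16)

# Weight sharing: store a small codebook plus one index per weight.
codebook, idx = share_weights(W, n_values=4)
W_shared = codebook[idx]

print("dense parameters:   ", W.size)
print("low-rank parameters:", A.size + B.size)
print("pruned parameters:  ", W_pruned.size)
print("distinct weights after sharing:", np.unique(W_shared).size)
```

In each case the compressed layer still consists of constant matrices multiplied by an input vector, which is why such methods are candidates for combination with a CMVM technique like LCC. How much accuracy a real network loses under each method depends on the model and usually requires retraining, which this sketch does not cover.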

Should you be interested in exploring any of these topics further, please do not hesitate to contact Hans Rosenberger and Johanna Fröhlich.